Data Engineering Newsletter #29

Data Engineering News

1. Introducing Apache Spark 4.0

“What’s new in Apache Spark 4.0, and how can it supercharge your workloads in Databricks Runtime 17.0?”

The authors present significant advancements in Spark 4.0, including SQL language enhancements, improved Python capabilities, and streaming improvements. These updates aim to make Spark more powerful, ANSI-compliant, and user-friendly, while maintaining compatibility with existing workloads. Discover how these features can elevate your data processing experience.

https://www.databricks.com/blog/introducing-apache-spark-40

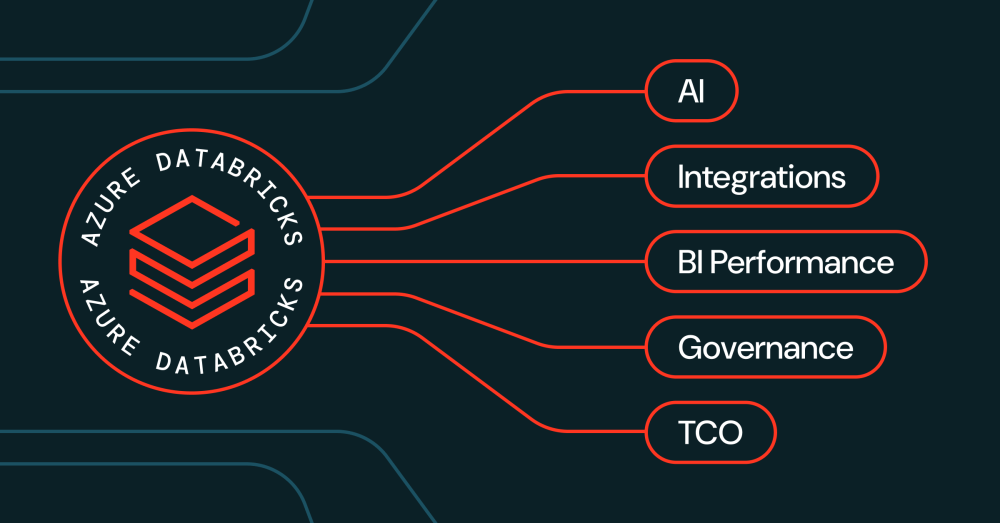

2. 5 Reasons Why Azure Databricks is the Best Data + AI Platform on Azure

Is Azure Databricks the Ultimate Data + AI Platform on Azure?

This blog explores how Azure Databricks brings together governance, performance, and integrated AI in one unified platform. With deep Azure integrations, from Power BI to OpenAI, it simplifies your entire data estate while cutting costs and boosting speed.

https://www.databricks.com/blog/5-reasons-why-azure-databricks-best-data-ai-platform-azure

3. Just make it scale: An Aurora DSQL story

Explore how Aurora DSQL delivers seamless performance, serverless scaling, and simplicity, all in one powerful story of data innovation.

In this blog, the author walks us through the origin story of Aurora DSQL: a bold re-architecture of relational databases for the serverless era. It's a story of hitting scale limits, questioning decades-old assumptions, and ultimately rewriting critical components in Rust for performance, safety, and control. A must-read for engineers interested in systems design, distributed databases, and the real-world trade-offs behind big technical bets.

https://www.allthingsdistributed.com/2025/05/just-make-it-scale-an-aurora-dsql-story.html

4. How AI will Disrupt BI As We Know It

“How exactly will AI transform Business Intelligence and reshape the way we make data-driven decisions?”

The author explains that AI, powered by context protocols like the Model Context Protocol (MCP), will outpace any BI tool at exploratory data analysis, breaking the “conveyor belt” of report lifecycles and forcing a new model for governance and presentation. It’s fascinating because it explains why every data practitioner (from analysts to executives) stands to gain: faster, more democratized analytics without sacrificing trust or control.

5. Iceberg?? Give it a REST!

“What if you could give Apache Iceberg a RESTful interface? How would that transform your data lake workflows?”

In this blog, Anders Swanson explains that the Iceberg REST Catalog (IRC) abstracts away storage complexity, letting you query and update Iceberg tables from any engine without manual file paths. It’s fascinating because it promises true multi-engine interoperability and removes last-mile friction for analytics teams.

All rights reserved, Den Digital, India. Links are provided for informational purposes only and do not imply endorsement. All views expressed in this newsletter are my own and do not represent the opinions of any current, former, or future employer.