Data Engineering Newsletter #28

Data Engineering News

1. Apache Airflow 3.0 Is Here!

"Apache Airflow 3.0 has just landed; what’s the feature you’re most excited about?"

This article explores the release's major new features, including a service-oriented architecture, a modern React-based UI, DAG versioning, and enhanced security, along with how these advancements can improve your data engineering workflows.

https://www.linkedin.com/pulse/apache-airflow-30-here-fatih-aktas-9p50f/

2. Eliminating Bottlenecks in Real-Time Data Streaming: A Zomato Ads Flink Journey

"How did Zomato leverage Apache Flink to eliminate bottlenecks and streamline real-time data streaming in their ads platform?"

Zomato’s Data Platform team revamped its real-time Ads feedback system by migrating from a legacy Flink Java pipeline to a modern Flink SQL architecture with automated reconciliation. This overhaul reduced state size by over 99% (from 150 GB to 500 MB), cut infrastructure costs by $3,000 per month, and eliminated downtime. Key improvements included handling late events through a separate reconciliation job, publishing incremental counts, and leveraging Flink SQL’s efficiency, which resulted in greater reliability, scalability, and business trust.

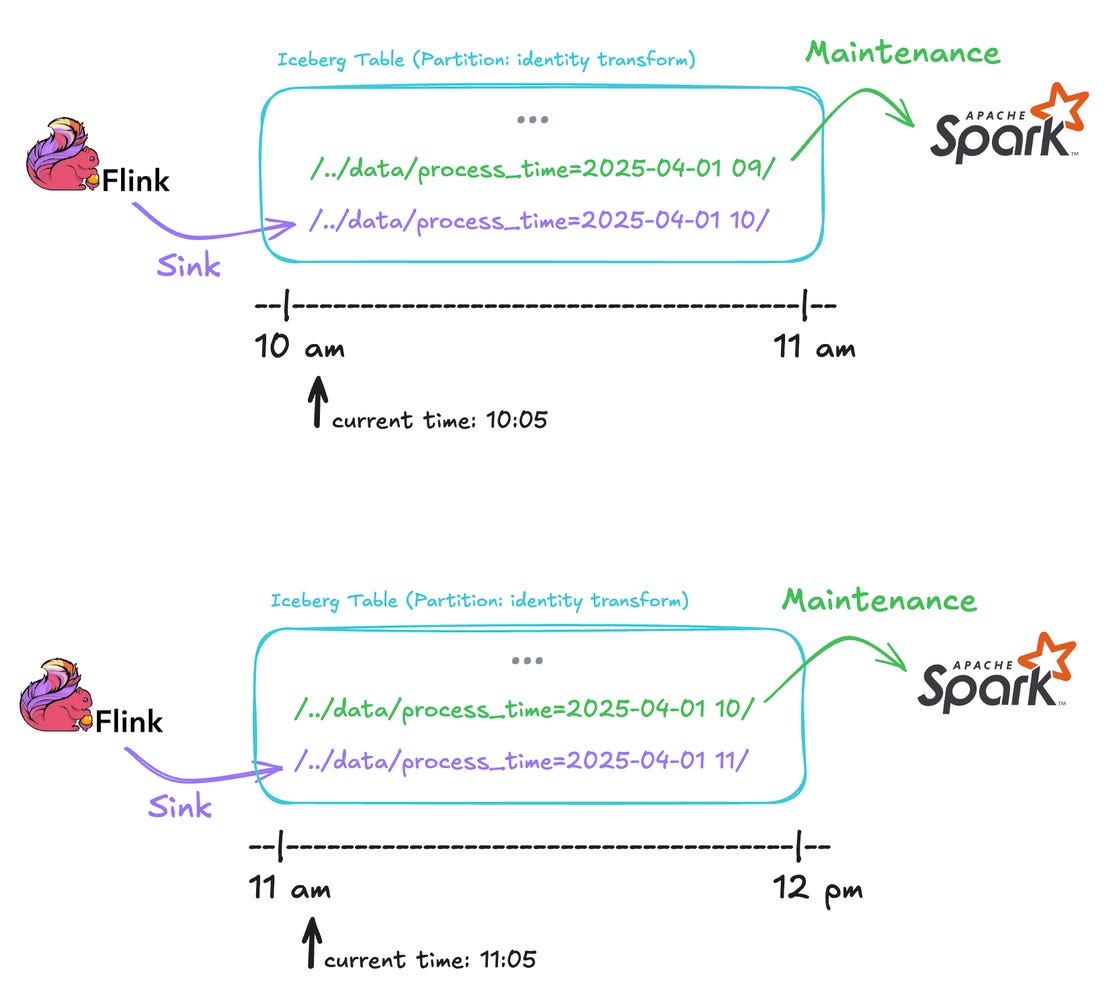
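The write-up names two patterns, publishing incremental counts and routing late events to a separate reconciliation job, without showing code here. The sketch below illustrates those two ideas in plain Python rather than Flink SQL, so it is not Zomato's pipeline. It assumes tumbling windows and a watermark that trails the newest event time by a fixed bound; `WINDOW_SECONDS`, `ALLOWED_LATENESS`, `StreamingCounter`, and `reconcile` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass, field

WINDOW_SECONDS = 60    # hypothetical tumbling-window size
ALLOWED_LATENESS = 30  # hypothetical lateness bound for the watermark, in seconds


@dataclass(frozen=True)
class Event:
    ad_id: str
    event_time: int  # epoch seconds


def window_start(ts: int, size: int = WINDOW_SECONDS) -> int:
    """Start of the tumbling window that contains timestamp ts."""
    return ts - ts % size


@dataclass
class StreamingCounter:
    """Emits per-batch delta counts per (ad_id, window) and sets late events aside."""
    watermark: int = 0
    late_events: list = field(default_factory=list)

    def process(self, batch):
        deltas = defaultdict(int)
        for ev in batch:
            start = window_start(ev.event_time)
            if start + WINDOW_SECONDS <= self.watermark:
                # Window already closed: leave it to the reconciliation job.
                self.late_events.append(ev)
            else:
                deltas[(ev.ad_id, start)] += 1
        if batch:
            newest = max(ev.event_time for ev in batch)
            self.watermark = max(self.watermark, newest - ALLOWED_LATENESS)
        return dict(deltas)  # increments only, not running totals


def reconcile(late_events):
    """Separate job: turn late events into correction deltas for closed windows."""
    corrections = defaultdict(int)
    for ev in late_events:
        corrections[(ev.ad_id, window_start(ev.event_time))] += 1
    return dict(corrections)


# Usage: a downstream sink adds up deltas from both paths.
counter = StreamingCounter()
totals = defaultdict(int)
batches = [
    [Event("ad1", 5), Event("ad1", 20), Event("ad2", 65)],
    [Event("ad1", 130)],
    [Event("ad1", 10)],  # arrives after the watermark has passed window [0, 60)
]
for batch in batches:
    for key, delta in counter.process(batch).items():
        totals[key] += delta
for key, delta in reconcile(counter.late_events).items():
    totals[key] += delta

print(dict(totals))  # {('ad1', 0): 3, ('ad2', 60): 1, ('ad1', 120): 1}
```

Because the streaming path only emits increments, it never has to hold closed windows in state, and the late event still lands in the right window once the reconciliation deltas are added.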

3. Iceberg Operation Journey: Takeaways for DB & Server Logs

"What can the Iceberg operation journey teach us about optimizing database and server log management for better performance and reliability?"

This article dives into Kakao’s real-world strategies for partitioning, compressing, and optimizing Iceberg tables for both database and server logs. It’s interesting because it reveals practical experiments, like testing zstd compression levels and file compaction routines, that directly impact performance and storage efficiency.

https://tech.kakao.com/posts/695

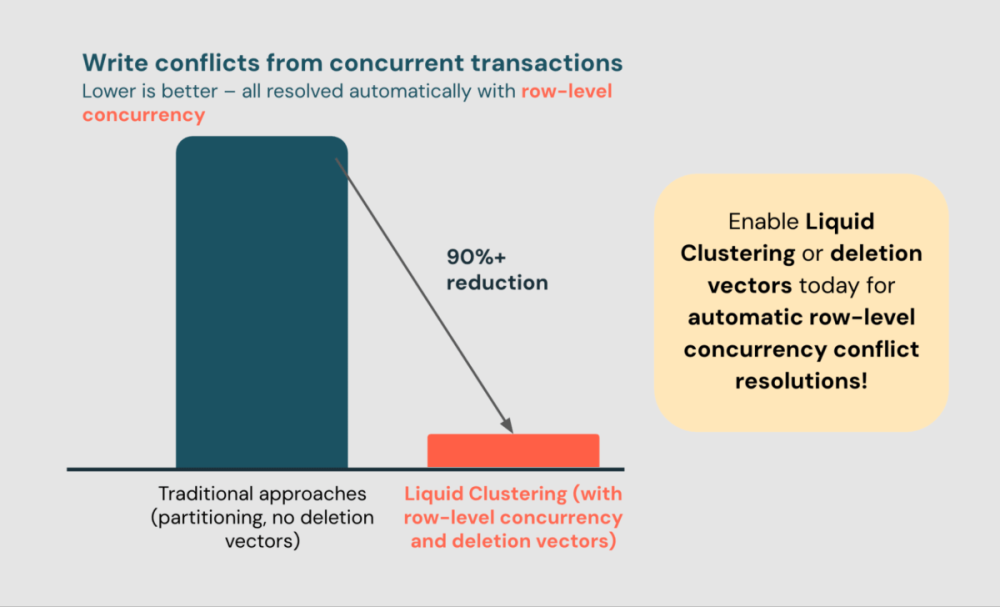
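The article reports Kakao's own compaction experiments; the sketch below is not their routine. It shows the general idea behind compaction planning, using first-fit decreasing bin packing to group small data files so that each rewrite produces one file close to a target size. `DataFile`, `plan_compaction`, the 512 MiB target, and the 100 MiB small-file threshold are all hypothetical. In practice, Iceberg provides its own `rewrite_data_files` procedure with a bin-pack strategy, which you would normally use instead of hand-rolling this.

```python
from dataclasses import dataclass

MiB = 1024 * 1024
TARGET_FILE_BYTES = 512 * MiB  # hypothetical target size for rewritten files
SMALL_FILE_BYTES = 100 * MiB   # hypothetical threshold: only smaller files are compacted


@dataclass(frozen=True)
class DataFile:
    path: str
    size_bytes: int


def plan_compaction(files, target=TARGET_FILE_BYTES, small=SMALL_FILE_BYTES):
    """First-fit decreasing bin packing of small files into rewrite groups.

    Each returned group would be rewritten as one file of at most `target` bytes.
    """
    candidates = sorted(
        (f for f in files if f.size_bytes < small),
        key=lambda f: f.size_bytes,
        reverse=True,
    )
    bins = []  # each bin is [total_bytes, list_of_files]
    for f in candidates:
        for b in bins:
            if b[0] + f.size_bytes <= target:
                b[0] += f.size_bytes
                b[1].append(f)
                break
        else:  # no existing bin has room: open a new one
            bins.append([f.size_bytes, [f]])
    # Rewriting a lone file gains nothing, so keep only groups of two or more.
    return [group for _, group in bins if len(group) > 1]


# Usage: three large files are left alone; five small ones are grouped.
sizes_mib = [300, 90, 80, 700, 60, 40, 200, 10]
files = [DataFile(f"part-{i:05d}.parquet", s * MiB) for i, s in enumerate(sizes_mib)]

for group in plan_compaction(files, target=150 * MiB):
    print([f.size_bytes // MiB for f in group])
# [90, 60]
# [80, 40, 10]
```

Sorting largest-first before packing tends to fill each group closer to the target, which means fewer, fuller output files per compaction run.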

4. Deep Dive: How Row-level Concurrency Works Out of the Box

"Ever wondered how databases manage multiple users updating the same row at the same time without chaos?"

The authors explore Databricks' innovative approach to row-level concurrency, highlighting how Liquid Clustering and deletion vectors enable automatic conflict resolution during concurrent data modifications. This technique streamlines data workflows, eliminating the need for complex partitioning or retry mechanisms.

https://www.databricks.com/blog/deep-dive-how-row-level-concurrency-works-out-box
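The post credits Liquid Clustering and deletion vectors with automatic conflict resolution. To see why row granularity matters, here is a toy Python model of optimistic concurrency control. It is not Databricks' or Delta Lake's actual algorithm. Each commit records the rows it deletes per file, standing in for a deletion vector, and is validated against everything committed since the writer's read version. `Commit`, `Table`, `try_commit`, and `part-0.parquet` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Commit:
    """Toy model of one writer's change: for each data file, the row positions
    it marks as deleted (a stand-in for a deletion vector)."""
    txn_id: str
    deleted_rows: dict = field(default_factory=dict)  # file path -> set of row positions


def file_level_conflict(a: Commit, b: Commit) -> bool:
    # Coarse check: touching any shared file is a conflict.
    return bool(a.deleted_rows.keys() & b.deleted_rows.keys())


def row_level_conflict(a: Commit, b: Commit) -> bool:
    # Finer check: conflict only when both writers touched the same row of the same file.
    shared = a.deleted_rows.keys() & b.deleted_rows.keys()
    return any(a.deleted_rows[p] & b.deleted_rows[p] for p in shared)


class Table:
    def __init__(self, row_level: bool):
        self.check = row_level_conflict if row_level else file_level_conflict
        self.log = []               # committed changes; log[i] produced version i + 1
        self.deletion_vectors = {}  # file path -> merged set of deleted row positions

    @property
    def version(self) -> int:
        return len(self.log)

    def try_commit(self, commit: Commit, read_version: int) -> bool:
        """Optimistic commit: validate against everything committed after read_version."""
        for other in self.log[read_version:]:
            if self.check(commit, other):
                return False  # the writer would have to re-read and retry
        for path, rows in commit.deleted_rows.items():
            self.deletion_vectors.setdefault(path, set()).update(rows)
        self.log.append(commit)
        return True


# Usage: two writers read version 0 and update different rows of the same file.
results = {}
for row_level in (False, True):
    table = Table(row_level=row_level)
    a = Commit("A", {"part-0.parquet": {3}})
    b = Commit("B", {"part-0.parquet": {7}})
    results[row_level] = (table.try_commit(a, read_version=0),
                          table.try_commit(b, read_version=0))

print(results)  # {False: (True, False), True: (True, True)}
```

With the file-level check, writer B is rejected even though it never touched row 3. With the row-level check, both commits succeed and their deleted-row sets are merged, so neither writer needs a retry.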

5. How Agoda Uses GPT to Optimize SQL Stored Procedures in CI/CD

"How is Agoda leveraging GPT to streamline and optimize SQL stored procedures in their CI/CD pipeline?"

In this article, the author explains how Agoda integrated GPT into their CI/CD pipeline, automating performance analysis and recommendations for SQL stored procedures. This approach has significantly decreased manual review times and accelerated merge request approvals.

All rights reserved, Den Digital, India. I have provided links for informational purposes only and do not imply endorsement. All views expressed in this newsletter are my own and do not represent the opinions of my current, former, or future employers.