Data Engineering Newsletter #26

Data Engineering News

1. How to Pitch Your Boss to Adopt Apache Iceberg?

Want to modernize your data lake? Here's how to pitch Apache Iceberg to your boss.

In this post, the author argues that Apache Iceberg is quietly becoming the core of a more open, flexible, and cost-efficient data future. It challenges the status quo of traditional warehouses by decoupling storage from compute, enabling true interoperability without vendor lock-in.

https://blog.det.life/how-to-pitch-your-boss-to-adopt-apache-iceberg-6da93c969f67

2. How AI will disrupt data engineering as we know it

Is AI about to change data engineering forever?

Tristan Handy says AI won’t replace data engineers; it will reshape their work entirely, automating tasks and unlocking new roles in platform engineering, automation, and business enablement. It’s a deep dive into how frameworks like dbt and tools like AI copilots are converging to change everything fast.

https://www.getdbt.com/blog/how-ai-will-disrupt-data-engineering

3. Upskilling data engineers

How do you grow as a data engineer in today’s fast-changing landscape?

Georg Heiler argues that upskilling in data engineering isn’t about chasing the latest tools but about mastering fundamentals, thinking locally, and designing scalable, testable systems. From asset graphs to metadata, mentorship to mindset, this is a practical, honest guide for anyone who wants to grow beyond buzzwords.

https://georgheiler.com/post/learning-data-engineering

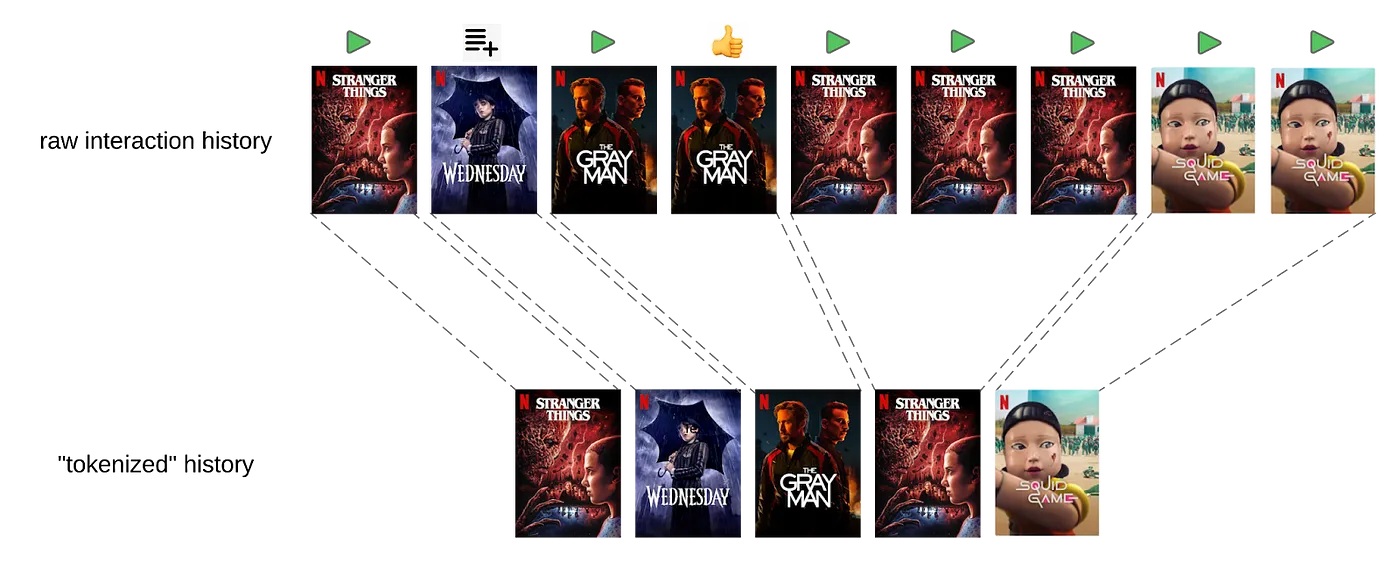

4. Foundation Model for Personalized Recommendation

What if a single foundation model could power all of Netflix’s personalized recommendations?

In this article, Netflix describes applying an LLM-style architecture to build a foundation model for personalized recommendations, moving beyond dozens of task-specific models to a unified, scalable system. By tokenizing user interactions and blending metadata with behavioral data, Netflix redefines how deep personalization works globally.

https://netflixtechblog.com/foundation-model-for-personalized-recommendation-1a0bd8e02d39

5. How to ETL at Petabyte-Scale with Trino

How do you run fast, reliable ETL at petabyte scale without breaking the system or the budget?

The author shows that Trino, with proper tuning and architecture, can power efficient, large-scale ETL pipelines using nothing more than SQL, intelligent compaction, and thoughtful configuration. It’s a rare, clearly written deep dive into how Salesforce handles petabyte-scale ingestion.

https://engineering.salesforce.com/how-to-etl-at-petabyte-scale-with-trino-5fe8ac134e36/

All rights reserved, Den Digital, India. I have provided links for informational purposes only; they do not imply endorsement. All views expressed in this newsletter are my own and do not represent the opinions of any current, former, or future employer.