Data Engineering Newsletter #24

Data Engineering News

1. Should Stakeholders be Writing SQL for Self-Service?

"Should business stakeholders write their own SQL, or is that a data team's job?"

This post explores the quiet chaos that unfolds when non-analysts write SQL, from silent bugs and broken logic to rising costs and conflicting truths. With stories from Amazon, eBay, and VMware, Brandon shows how good intentions in self-service can lead to dangerous outcomes.

https://medium.com/@analyticsmentor/should-stakeholders-be-writing-sql-for-self-service-289561fbbbbf

2. Introducing Meta’s Llama 4 on the Databricks Data Intelligence Platform

"Ready to unlock next-gen AI with Meta’s Llama 4 on Databricks?"

This blog explores how Meta’s Llama 4, now live on Databricks, lets you build powerful, multimodal AI agents grounded in your own data. The authors show how features like Unity Catalog, Mosaic AI, and Test-Time Adaptive Optimization make it possible to scale safely, customize fast, and deploy without infrastructure complexity.

https://www.databricks.com/blog/introducing-metas-llama-4-databricks-data-intelligence-platform

3. Announcing Anthropic Claude 3.7 Sonnet is natively available in Databricks

"What can your enterprise build with Claude 3.7 Sonnet now natively available on Databricks?"

The author says that Claude 3.7 Sonnet, Anthropic’s most advanced model, is now natively available in Databricks, unlocking hybrid reasoning, agentic workflows, and full governance at scale. You can now build intelligent, domain-specific AI agents directly on your enterprise data without managing infrastructure.

https://www.databricks.com/blog/anthropic-claude-37-sonnet-now-natively-available-databricks

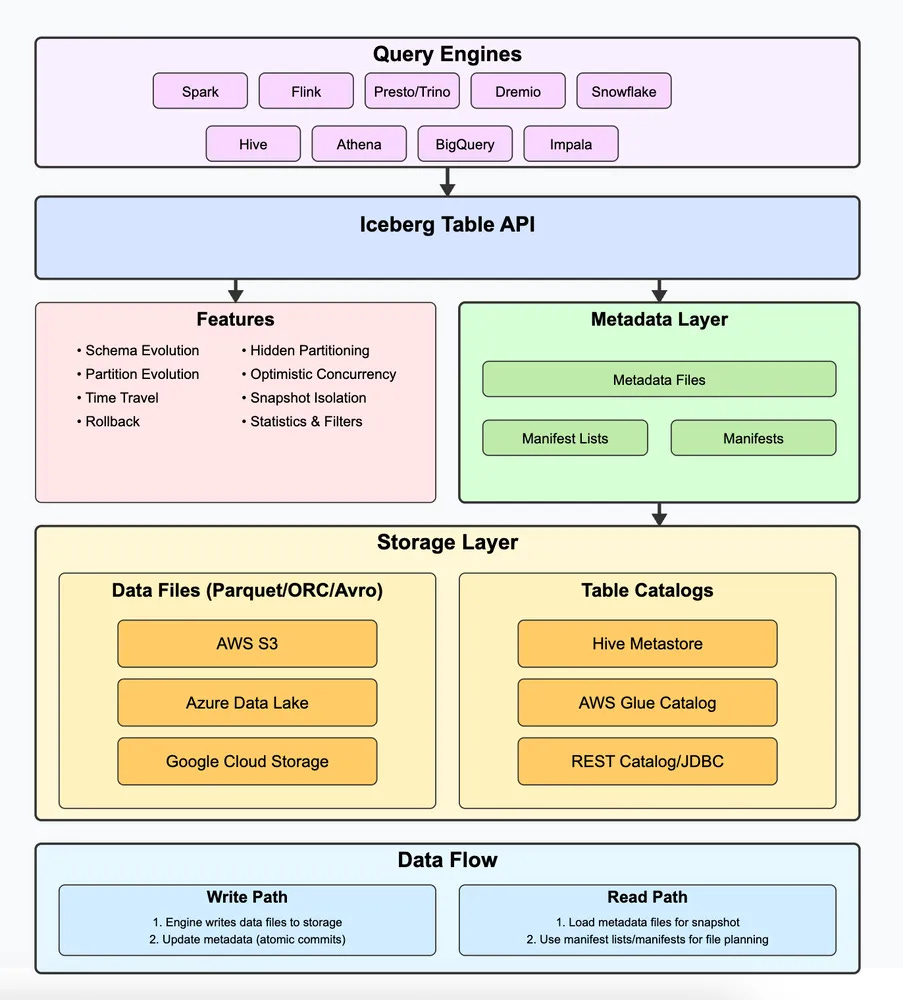

4. How to Load Data into Apache Iceberg: A Step-by-Step Tutorial

"Curious how to load data into Apache Iceberg without getting lost in the lake? 🧊"

The author says that loading data into Iceberg isn't just about writing files; it's about mastering metadata, schema evolution, and real-time ingestion. Whether you're using Spark, Flink, or Python, this tutorial walks you through it all with clarity and depth. It's a must-read if you care about building future-proof data lakes.

https://estuary.dev/blog/loading-data-into-apache-iceberg/

5. How Apache Iceberg Powers the Data Lake and Trino Makes It Explorable

"Ever wondered how Apache Iceberg turns your data lake into a powerful warehouse, and how Trino lets you query it like a pro?"

Rakesh Rathi says that Apache Iceberg brings order, version control, and safety to your data lake, while Trino gives you the power to query it all with simple SQL. If you’ve ever dreamed of joining S3-based Iceberg tables with live PostgreSQL data without moving anything, this blog shows you how. It's a must-read for anyone building the next-gen data platform.

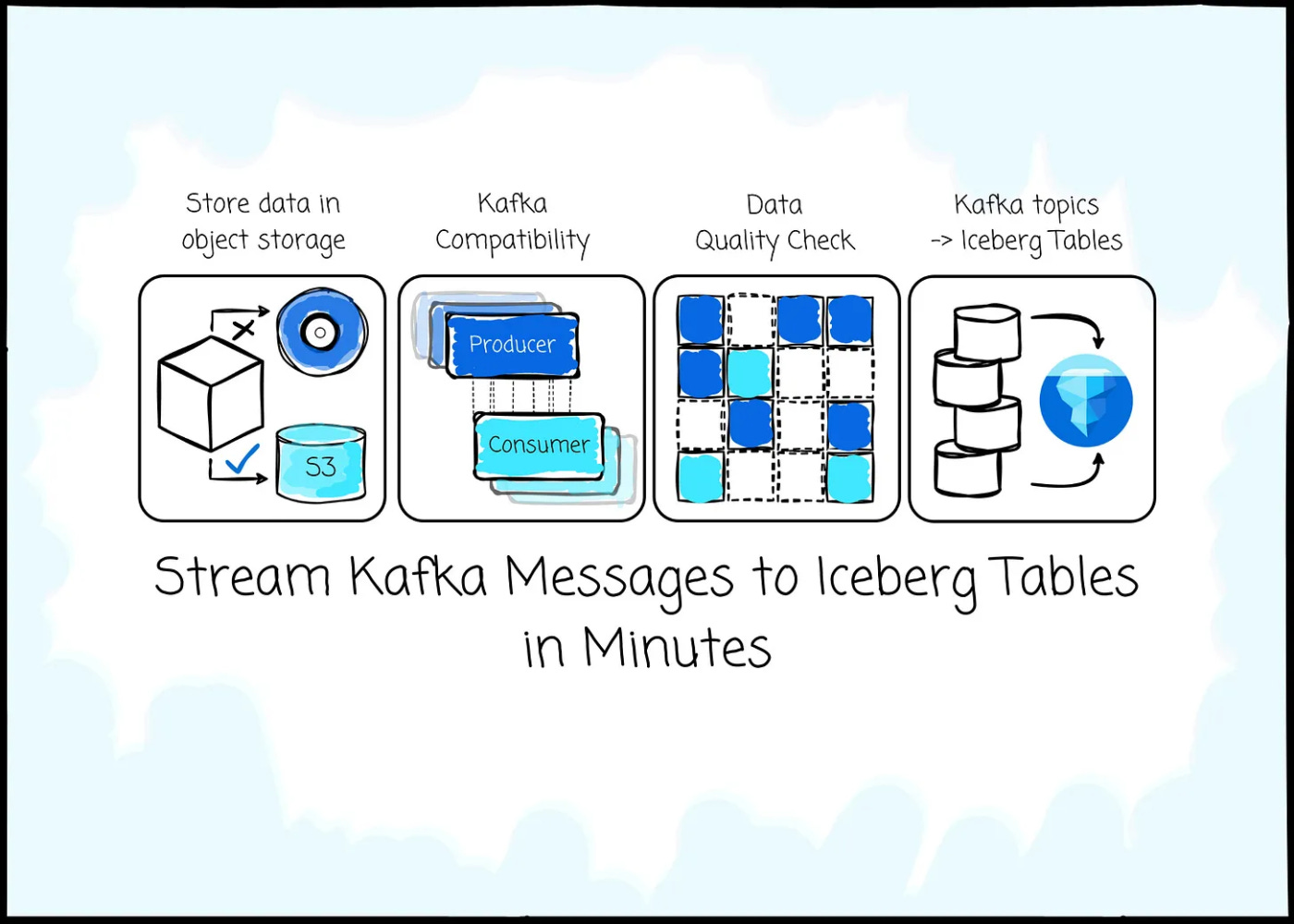

6. Bufstream: Stream Kafka Messages to Iceberg Tables in Minutes

"Want to stream Kafka messages into Apache Iceberg tables in just minutes, without the usual complexity?"

This article presents Bufstream, which reimagines Kafka for the cloud: cheaper, stateless, schema-aware, and natively integrated with Apache Iceberg. It removes the need for fragile pipelines and lets you stream Kafka messages into Iceberg tables in minutes. If you're serious about simplifying real-time data architecture, this read is for you.

https://blog.det.life/bufstream-stream-kafka-messages-to-iceberg-tables-in-minutes-6c60c470e67f

All rights reserved Den Digital, India. I have provided links for informational purposes only; they do not imply endorsement. All views expressed in this newsletter are my own and do not represent the opinions of my current, former, or future employers.